On Human Nature(s) 4: Kopfkino, Predictive Coding, and Emotion

Marc Green

This section continues the discussion of System 1 kludges. While those discussed in the previous section, affordances, heuristics and biases, and schemata, are widely known and commented upon, this section examines two expectation generators that are less widely appreciated, what I’ll call Kopfkino and predictive coding. The section also examines another System 1 kludge, emotion, which usually receives inadequate consideration when evaluating causation.

Kopfkino

In addition to seeing “real” images, humans often create phantom images in the head. Some pertain to what is going to happen in the future. A person going on a holiday might imagine himself lying on the beach, sipping umbrella drinks, etc. An athlete might visualize his performance prior to a competition. A fireman in a burning building might imagine a potential path through the fire to rescue an occupant (see Klein, Thunholm, & Schmitt, 2004). Since there is no simple English word for this phenomenon, I’ll label such mental preparations with the German word “Kopfkino,” which literally translates to head cinema (“Kopf” and “Kino”). It captures the notion that the person creates a little movie that runs through the head1.

Some (e.g., Moulton & Kosslyn, 2009) have argued that mental imagery such as Kopfkino acts much like schemata. Its main function is to create predictions of the future based on experience. In a sense, the brain is running a simulation of future events. This comports well with research (e.g., Klein, 1993) into recognition-primed decision (RPD), which finds that actors in high-stakes situations frequently employ such mental simulation prior to acting.

The benefits of Kopfkino are similar to those of schemata. It is often an effective performance enhancer that creates priming. It is a short-cut to gaining situational awareness, and it automatically sets expectations about the situation to be encountered and the response that will likely lead to a positive outcome. Like a script, it allows prediction of future events. For example, behavioral studies have shown that single episodes of imagery, generated for five seconds, can bias subsequent perception.

Last, Kopfkino can be either voluntary or involuntary. The voluntary type is likely to be a product of System 2. The person creates the movie by intentionally analyzing what is likely to happen. The involuntary type is more likely to be a case of System 1 automatically generating the images. Both System 1 and 2 generated mental images affect sensory processing in V1, primary visual cortex. This leads to the next expectation mechanism, predictive coding.

Predictive Coding

“Predictive coding” is a class of theories suggesting that the brain is constantly making predictions, testing those predictions against actual events, and then updating the predictions2 using something like a Bayesian statistical model3. On the surface, there is nothing new here as it simply says that humans learn from experience. However, predictive coding goes further. Recall that normal convention divides information sources into the dichotomy of bottom-up sensory or top-down cognitive. The control of behavior is then conceptualized as a sequential process. First, bottom-up information flows from the eyes to the brain. Most depictions of visual processing show this as a one-way flow. Next, higher top-down processes interpret the input and then make decisions about response.

Although various predictive coding models can differ in significant ways, they all see the standard sequential model as an oversimplification. Instead, they posit the presence of reciprocal connections between sensory and cognitive processes4. Predictive coding research using brain-imaging demonstrates that while the visual system is sending information to the brain, the brain is also tuning the visual system. Higher cognitive processes create predictions which generate signals down to the visual system so that it is better able to process input (e.g., Rao & Ballard, 1999). That is, the prediction (expectation) is directly coded into the visual system itself. The brain then compares the expected sensory input to the actual sensory input to update the expectation.

There are two competing theories about how this predictive coding occurs. Both assume that the brain efficiently attempts to modify its model of the world by focusing on the minimal amount of information, the error signal produced when an apparent prediction error occurs. Both theories suggest that the brain then suppresses the sensory system, but they differ in what is suppressed. The “sharpening hypothesis” (Kok, Jehee, & de Lange, 2012) says that if the bottom-up sensory signals are incongruent with prior expectations, then they are suppressed. Sensory neurons consistent with the prediction are more likely to fire. The presumed benefit of this sharpening is higher contrast because noise (uncertainty) in the sensory input information is reduced. In a sense, the top-down information biases the sensory system toward signaling the expected input. Conversely, the “dampening hypothesis” (e.g., Richter, Ekman, & de Lange, 2018) takes the opposite view. It says that the brain suppresses the sensory signals that are congruent with the predicted input. Unexpected input creates a stronger signal.

The two theories can be reconciled by assuming that sharpening and dampening occur in different circumstances (Rossel, Peyrin, & Kaufmann, 2023). When sensory conditions are good, sharp image, high contrast, and good visibility, then the predictive coding is likely to be accomplished through suppressing the expected input. When there is uncertainty due to poor sensory information, as for example in low visibility conditions, then sharpening would occur. The suppression of the unexpected input would be greater and the relative weight of the prediction would increase (e.g., Grush, 2004). The brain biases the percept more toward the predicted input. This effect is not a new suggestion. When discussing Signal Detection Theory, for example, I have already explained that bias increases when there is a low signal-to-noise ratio, i.e., when the sensory information contains a high noise level (Green, 2024).
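The claim that the prediction carries more weight when sensory information is poor can be illustrated with a toy calculation. This is only a minimal sketch under strong assumptions, not a model taken from the cited papers: the prediction and the sensory input are each reduced to a single number with Gaussian uncertainty, and the function name `combine` and all the numeric values are hypothetical.

```python
def combine(prior_mean, prior_var, sensory_mean, sensory_var):
    """Precision-weighted (Bayesian) combination of a prediction and a sensory sample.

    Assumes both sources are Gaussian; the variances stand in for uncertainty.
    """
    # Weight given to the sensory input; it shrinks as sensory noise grows.
    sensory_weight = prior_var / (prior_var + sensory_var)
    # The error signal: how far the input departs from the prediction.
    prediction_error = sensory_mean - prior_mean
    # The updated estimate moves toward the input in proportion to that weight.
    posterior_mean = prior_mean + sensory_weight * prediction_error
    posterior_var = prior_var * sensory_var / (prior_var + sensory_var)
    return posterior_mean, posterior_var, sensory_weight


# Hypothetical numbers: the prediction says 10, the senses report 20.
good_visibility = combine(10.0, 4.0, 20.0, 1.0)   # low sensory noise
poor_visibility = combine(10.0, 4.0, 20.0, 16.0)  # high sensory noise

print("good visibility estimate:", good_visibility[0])
print("poor visibility estimate:", poor_visibility[0])
```

With the same prediction and the same input, the estimate stays much closer to the prediction in the noisy case. The sketch covers only the weighting idea; it does not model the neural suppression that distinguishes sharpening from dampening.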

The existence of predictive coding by sharpening has major implications for decision and action, especially when visibility is poor and time is short. For example, imagine a police officer on patrol at night. If he expects to encounter a suspect with a gun and sees something in the man’s hand, then both his cognitive processes and the visual system itself are pre-tuned to signal the percept that the object is a gun. Several other factors also influence this likelihood (see below). Lastly, predictive coding should presumably influence schema invocation by biasing scene perception.

Summary of Expectation Generation

Before leaving the subject of expectation generation, I stress two more points.

- Suppose a person acts based on System 1. If you later ask him why he did what he did, you might get an accurate response, but you will often get an answer that is mere rationalization that was subconsciously created. Humans have only a limited ability to look back and introspect into System 1 decisions and behaviors. The person may be able to describe what happened in detail (although memory processes like normalization5 are likely to occur), but that does not mean that the recalled details are what controlled decision-making and behavior at the critical moment. The person is applying System 2 analysis to an action controlled by System 1. He probably believes the rationalization to be accurate, but that’s all it is: a rationalization; and

- To reiterate the critical point made earlier, System 1 is automatic, but this does not mean that it is involuntary in the sense that it is reflexive. There is little doubt that the vast majority of our physical behavior is voluntary in the sense that the brain sends an efferent command to the effector muscles. No one and nothing is forcing us to perform the action. But the action is controlled by cognitive decision-making processes occurring outside our awareness. Do not lump consciousness and voluntariness together because behavior can be both automatic and voluntary. Such a conceptual error often leads to counterfactual thinking, 20/20 hindsight, and misguided blame attribution. After all, the person could theoretically have done otherwise, which is likely true. However, this ignores the reality that most of our behavior is automatic and the processes that control it operate outside of awareness and volition.

Emotion

Suppose the actor is under threat and System 1 and System 2 have failed: System 2 is too slow to form a plan and System 1 has no learned schema/script to make predictions (RPD fails). I’ve already explained that this creates shock and confusion. What happens now? This is the next topic of discussion.

Humans have another System 1 kludge which is somewhat unlike those discussed so far—emotion. Affect is readily confused with emotion and is often used as a synonym. I’ve already mentioned the affect heuristic which is the feeling of pleasantness/unpleasantness attached to some object, event, or situation. The affect heuristic readily influences decision and is the reason that “buyers are liars.” In contrast, I use emotions to mean mental states such as anger, fear, anxiety, surprise, disgust, etc. As described above, failure of the current active schema can give rise to these emotions.

The role of emotion in decision-making received little consideration until recently. Some research has begun to appear, but it seldom reflects emotions that arise in circumstances where an actor might die in two seconds unless he does something. (Most studies use a weak fear-inducing stimulus such as a spider.) The reason is obvious: people cannot be placed in real life-threatening situations6. This means that there are few studies of decision-making in real life-and-death circumstances. However, there is sufficient research to make general predictions of how a person will respond when emotionally aroused, especially when under threat.

While Kahneman briefly includes emotions in his discussion of System 1, this is probably a mistake. Compared to other System 1 kludges that require learning and experience, emotions provide an even more primitive and faster solution to the need for circumventing the limitations of human information processing. This is why I suggest splitting off emotions as System 0, because it is even older and more deeply embedded than most System 1 mechanisms. Moreover, emotions do not seem to fit neatly into the bottom-up vs. top-down dichotomy. They are top-down because they originate in the brain as opposed to the senses, but "top-down" intuitively means originating in a high mental level. Obviously, this is not where emotions arise.

In a given situation, an actor may find himself without an applicable schema or the time to perform analysis, but he will be able to fall back to emotions as a guide to decision-making and behavior. Emotions get a lot of bad press as leading to irrational or otherwise unproductive behavior. However, emotional processing should be recognized for what it is: a solution to be employed when all the other, theoretically better behavioral guides have failed. Moreover, as with other System 1 kludges, the criticism occurs in part because its failures draw more attention. Finally, it is the last line of defense in life-threatening circumstances, as described below.

While emotions themselves are innate, System 0 can have both learned and innate triggers. An example of a learned trigger is the story of Albert and the white rat where through classical conditioning a person learns to associate an emotion with a previously neutral stimulus. The sound of a dentist drill is another common example.

A good example of an innate trigger is “looming.” When an object approaches the eye, its retinal image grows until it eventually fills the entire visual field—it has hit the viewer in the face. You can see this by simply holding your hand at arm’s length and moving it toward the face. The hand grows bigger and bigger until it fills the entire visual field when it’s at the eye and has hit you. In short, looming tells you whether an object is going to hit you. A rapidly looming object is going to hit you very soon. In other words, you are facing a physical threat.

Rapid looming of a nearby object elicits a defense reaction that short-circuits cognition. It is likely an innate predisposition since infants as young as three weeks old have been shown to make defensive responses to looming. Presumably, they are unable to perform cognitive processing so some deeply-wired mechanism must be operating directly on the sensory input. Physiological studies have identified looming detectors in the brain and defensive responses to looming have also been demonstrated in virtually all species tested right down to insects. It is a very primitive survival mechanism.

Three main factors determine when a looming object will hit you. One is speed. Faster approach speed creates faster looming. Another is size. The larger the object, the faster it appears to loom. However, the biggest factor is proximity. Objects loom much faster when they are near. A person (large size) rushing out a nearby doorway (close proximity) will create fast looming and induce a strong emotional response. An additional factor is the degree of perceived threat. Viewers judge that threatening objects hit them sooner than nonthreatening ones.
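The geometry behind these three factors can be sketched with a short calculation. This is an illustrative sketch only, assuming a flat object of a given width approaching the eye head-on at constant speed; the function names and the example sizes, distances, and speeds are hypothetical. The looming rate is the time derivative of the visual angle 2·tan⁻¹(width / (2·distance)).

```python
import math


def visual_angle(size_m, distance_m):
    """Visual angle (radians) subtended by an object of the given width."""
    return 2.0 * math.atan(size_m / (2.0 * distance_m))


def looming_rate(size_m, distance_m, speed_mps):
    """Rate of growth of the visual angle (radians per second).

    Derivative of visual_angle() with respect to time when the distance
    shrinks at a constant approach speed.
    """
    return size_m * speed_mps / (distance_m ** 2 + size_m ** 2 / 4.0)


# Hypothetical baseline: a 0.5 m wide object, 10 m away, approaching at 5 m/s.
baseline = looming_rate(0.5, 10.0, 5.0)
faster = looming_rate(0.5, 10.0, 10.0)  # double the speed
larger = looming_rate(1.0, 10.0, 5.0)   # double the size
nearer = looming_rate(0.5, 5.0, 5.0)    # half the distance

for label, rate in [("baseline", baseline), ("faster", faster),
                    ("larger", larger), ("nearer", nearer)]:
    print(f"{label:8s} {math.degrees(rate):.2f} deg/s")
```

Under these assumptions, doubling speed or size roughly doubles the looming rate, while halving the distance roughly quadruples it, because distance enters the formula squared. That is one way to see why proximity dominates.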

The threat created by nearby looming objects is also amplified when it falls within “defensive peripersonal space” (DPPS) (e.g., Bufacchi, Liang, Griffin, & Iannetti, 2016). DPPS is a protective area surrounding the body. Potentially harmful objects located within this space elicit stronger defensive reactions. Some conceptualizations of peripersonal space put it at 2-3 meters. However, DPPS is probably not a fixed size but becomes greater in response to a looming threat.

For present purposes, the most relevant research has studied emotions that arise from threat. When threat evokes emotions of surprise, anxiety, and fear, information processing, decision-making, and behavior are characterized by the following:

Automatic reaction. (System 1, not volitional). This automatic response may also be adaptive because it is fast and less impaired by stress. Recall, for example, an act need only trigger a motor schema for it to run off in stereotyped fashion. However, very extreme stress can disrupt even highly overlearned motor schema. Response may also be automatically influenced by stimulus-response compatibility and conflict.

Fast reaction. Perhaps the most powerful effect is increased speed. In a crisis, speed of response is the main concern. This means that an automatic defense reaction is likely to be triggered before any System 2 planning occurs. It may even occur prior to System 1 or activate because System 1 failed (no available schema). Rousseau succinctly summarized this in saying “I felt before I thought.”

Perceptual narrowing. By now, most people have heard of this phenomenon. It is often described as narrowing the attentional spotlight’s focus very tightly around the sightline so that the peripheral field is ignored—tunnel vision. However, perceptual narrowing is a much more general mental phenomenon. The viewer also narrows in the sense that he perceives fewer interpretations of the scene and considers few, simple alternative responses. The viewer sacrifices depth of analysis for speed. This is the general theme of System 0 behavior, trade off accuracy and complexity for speed.

Negative information is more salient and more heavily weighted. Decision makers under threat seek and most readily process negative information and then place more emphasis on that information. Shermer (2011) suggests that the bias toward negative information is a survival mechanism. In Signal Detection Theory terms, a person acting under uncertainty can make two types of error when judging whether an object/environment is a threat. One is a “false alarm,” where the judgment is that there is a threat, but in fact none exists. The other error type is a “miss,” where the judgment is no threat when in actuality one exists. The decision maker could suffer any number of false alarms, and the consequences would be minor. However, even one miss could be fatal. Negative information is then more useful because the consequences of a miss are potentially so great7. This emphasis on the negative is an exaggerated version of the “negativity bias” which commonly affects most people in even mildly threatening conditions.
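The false alarm versus miss tradeoff can be made concrete with the standard equal-variance Gaussian version of Signal Detection Theory. The sketch below is a hedged illustration, not a model from the sources cited here: the sensitivity value, the threat probability, and the cost figures are all hypothetical, and correct decisions are assumed to carry no payoff.

```python
import math
from statistics import NormalDist


def optimal_criterion(d_prime, p_threat, cost_miss, cost_false_alarm):
    """Evidence level above which responding "threat" minimizes expected cost.

    Assumes no-threat evidence ~ N(0, 1) and threat evidence ~ N(d_prime, 1).
    """
    # Likelihood-ratio threshold implied by the prior odds and the costs.
    beta = ((1.0 - p_threat) / p_threat) * (cost_false_alarm / cost_miss)
    # Convert the likelihood-ratio threshold to a position on the evidence axis.
    return math.log(beta) / d_prime + d_prime / 2.0


def error_rates(d_prime, criterion):
    """Return (false alarm rate, miss rate) for a given criterion."""
    no_threat = NormalDist(0.0, 1.0)
    threat = NormalDist(d_prime, 1.0)
    false_alarm_rate = 1.0 - no_threat.cdf(criterion)
    miss_rate = threat.cdf(criterion)
    return false_alarm_rate, miss_rate


# Hypothetical values: modest sensitivity, threat and no threat equally likely.
neutral = optimal_criterion(1.0, 0.5, cost_miss=1.0, cost_false_alarm=1.0)
cautious = optimal_criterion(1.0, 0.5, cost_miss=100.0, cost_false_alarm=1.0)

fa_neutral, miss_neutral = error_rates(1.0, neutral)
fa_cautious, miss_cautious = error_rates(1.0, cautious)

print(f"equal costs:  FA={fa_neutral:.3f}  miss={miss_neutral:.3f}")
print(f"costly miss:  FA={fa_cautious:.3f}  miss={miss_cautious:.6f}")
```

When a miss is assumed to cost far more than a false alarm, the criterion shifts so that almost any evidence is treated as a threat: misses nearly vanish while false alarms become the norm. This is the “better safe than sorry” bias described above.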

More reliance on bottom-up information. When no pre-existing schema automatically surfaces, actors prioritize sensory information. However, the sensory information must be simple and processed with high speed.

Chaining is strongly promoted. Imagine that you are late for an important meeting and are feeling stressed. You show up at the building, approach the elevator bank, and see the up-arrow call button. What happens next? You are likely to push the button, but not once. The likely response is a rapid series of tap, tap, tap etc. In response to emotional stress, humans try to make their responses stronger in some way. If the response has a continuum, like muscle exertion, the actor will ramp up the strength used. When the response is discrete and cannot be amplified incrementally, however, the actor makes the response stronger by rapidly repeating it in a chain. This is why you repeatedly press the elevator button (and probably used some force to magnify the response) when late for the meeting. The important point is: the many button presses constituted a single response. Of course, if you stopped and thought, then you would know that pressing the button more than once has no added effect on calling the elevator. But you didn't stop and think—you just acted. System 0 had overridden System 2.

Response is keyed to low spatial frequency information. An example of the sensory information with high priority is the “low spatial frequencies” in a scene. Their prominence in decision-making is another reflection of the need for speed when under threat.

To explain what this “spatial frequency” means, I first lay some groundwork about basic visual processing. Images can be described in several ways. One is by the lines and edges that they contain. Another is by their “spatial frequency” content (e.g., Green, Corwin, & Zemon, 1976). Figure 1 is a classic image from the visual science literature showing the concept of spatial frequency. It is the number of stripes in a given area of visual angle (roughly distance across the page8). Across the horizontal axis, the stripes become thinner, i.e., the frequency goes from low to high. In the vertical direction, the contrast decreases from bottom to top. Note that the high spatial frequencies lose their apparent contrast more from bottom to top. This is because they are more difficult to see in low visibility conditions.9

Figure 1. Spatial frequencies increasing right while contrast decreases upward.

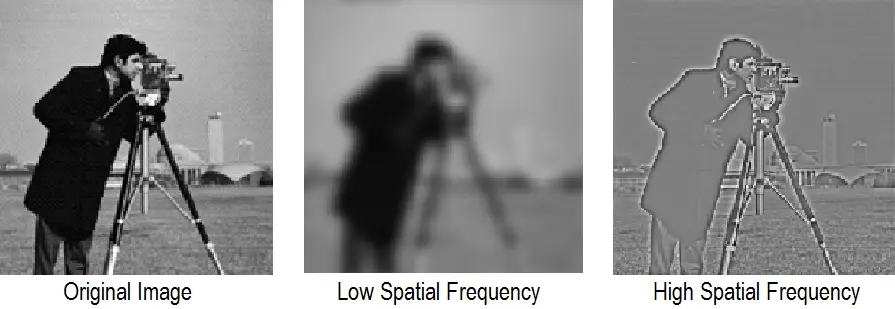

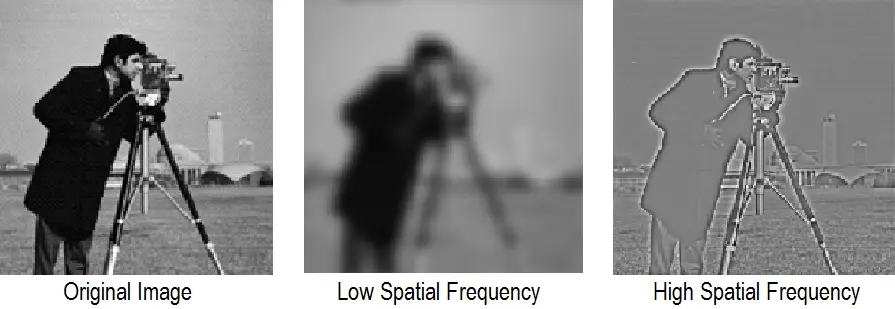

Mathematically, any image can be described as a collection of these patterns with different frequencies, orientations and contrasts10. That is, the image shown below in Figure 2 can be described by its spatial frequency content, its “spectrum.”

Figure 2 shows an original image that has been filtered to reveal only the low spatial frequencies (middle) and then only the higher spatial frequencies (right). That is, each of the rightward two images shows only a narrow spectrum of the original’s constituent spatial frequencies. It is obvious that the two images are complementary in some way. Roughly speaking, superimposing the two filtered images would approximately produce the original11. The lower frequency image is obviously blurry. Increasing spatial frequency reveals the finer detail, the edges that define shape.

Figure 2. A photographic image filtered to show the low and high spatial frequencies12.

Anyone who has used the edge sharpening function of an image processing program is attempting to magnify the high spatial frequencies to sharpen the edge detail and make thin lines more distinct. Conversely, the blurring feature removes high spatial frequency information. For present purposes, the TLDR is that low spatial frequencies reveal only a blurry impression of overall shape. The higher frequencies are needed to supply the detailed information that creates a crisp, sharp image and shape definition.
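The split into blurry low frequencies and detailed high frequencies can be sketched in one dimension. This is a simplified illustration, not the filtering used for the figures: it assumes a single row of pixel values, uses a moving-average blur as a stand-in for a low-pass filter, and treats whatever the blur removes as the high-frequency part. The function name `box_blur` and the pixel values are hypothetical.

```python
def box_blur(signal, radius):
    """Crude low-pass filter: replace each sample with its neighborhood mean."""
    blurred = []
    for i in range(len(signal)):
        lo = max(0, i - radius)
        hi = min(len(signal), i + radius + 1)
        window = signal[lo:hi]
        blurred.append(sum(window) / len(window))
    return blurred


# One row of pixels: a dark-to-light edge, plus a thin bright line at index 4.
row = [0.0] * 8 + [1.0] * 8
row[4] = 1.0

low = box_blur(row, 2)                        # blurry overall shape
high = [p - l for p, l in zip(row, low)]      # fine detail removed by the blur
rebuilt = [l + h for l, h in zip(low, high)]  # the two parts sum to the original

print("low :", [round(v, 2) for v in low])
print("high:", [round(v, 2) for v in high])
```

In the low-pass row the thin line is smeared into a faint bump and the edge becomes a gradual ramp, so only the coarse layout survives. The high-pass row is where the line and the exact edge location stand out. Because this sketch defines the high-pass part as the residual, the two parts add back to the original exactly.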

Under stress, actors rely more on the low spatial frequencies because this is the information that travels most rapidly through the visual system and produces the fastest reaction time (e.g., Vassilev & Mitov, 1976). They are also better at attracting attention. As a result, the actor’s first impression and response is determined by a blurred perception of overall shape. In good visibility, the higher, more detailed frequency bands soon catch up with the low bands. Under time pressure and stress, however, the actors base response on the blurry low spatial frequencies before there is enough detail to distinguish between objects of similar overall shape. In poor visibility, moreover, high spatial frequencies may never appear due to their lower apparent contrast and detectability. Unsurprisingly, under threat viewers make better form discriminations with lower spatial than with higher spatial frequencies (e.g., Lojowska, Mulckhuyse, Hermans, & Roelofs, 2019).

Contrast polarity is another factor that determines perception under threat. Figure 3 shows that contrast comes in two polarities, positive (light on dark) and negative (dark on light). In most normal scenes at night, the polarity depends on location of the light source. Positive polarity occurs when the light source is in front of the subject and negative occurs when the light source is behind the subject.

Figure 3. Positive contrast left and negative right. The bottom paired is blurred, rendering only low spatial frequency information.

The effects of polarity are obvious in the top pair of Figure 3. Negative contrast obscures details such as the man’s hands. This is why it is sometimes termed “silhouette” contrast. The bottom pair shows the same images filtered to remove high spatial frequencies. The blurring further degrades image quality, and the hands are less distinct even in positive contrast, while the negative contrast loses even more detail.

Summary

This concludes the discussion of the kludges that humans use to circumvent our limitations, acting in a bounded rationality with limited information, limited resources, and often, limited time. If there is a single theme that ties the kludges together, it is the tradeoff between ease and speed on one hand and accuracy and complexity on the other. Table 1 is a very rough guide to the tradeoff:

Table 1. Tradeoffs among Systems 0, 1, and 2.

| System | Speed | Accuracy | Situations |
|---|---|---|---|
| 2 | slow | very high | complex or novel when there is time for analysis |
| 1 | fast | high | routine that have been frequently encountered |
| 0 | very fast? | uncertain | fundamental surprise, especially under threat when speed matters |

System 2 is the most accurate and can deal with the most complex problems, but it is also the most resource intensive and the slowest. When possible, tasks will usually be offloaded to System 1, which is good for routine situations. It is faster, uses little mental resource and still provides very high accuracy, although it can err in several ways. The affordances that objects offer can be beneficial but not always. Mental short-cuts usually work but not always. Schemata almost always work, but they are holistic and there are several ways that they can go wrong, especially when circumstances change unexpectedly. Last, System 0 is always there as a final fall back when all else fails, especially when under the shock of fundamental surprise and/or threat. It uses little mental resource and operates on the most salient sensory information, but it is of course notoriously error-prone. It can be very fast, but only if there is a highly salient response. If the person is caught in something like an avoidance-avoidance conflict (see section 3), then the response can be slow. System 0 is better than nothing, especially as it is there as a protection against dire consequences. It works on the basis that it is better to be safe than sorry.

So far, I have presented a mostly theoretical discussion of the kludges. Except for a few examples, they have been disembodied from the flow of real behavior. The next section examines them operating in a real-world situation.

Next: Applying the Kludges in Case Analysis

Endnotes

1In German, it means a bit more. It suggests a mental movie that the person cannot stop from obsessively running.

2For present purposes, the terms “prediction” and “expectation” are synonyms.

3Statements such as “the brain is performing Bayesian inference” should be taken in the correct sense. It is easy to lapse into teleology, but the brain isn’t trying to do anything. It is a mechanism that has no end goal. It just does what it does. Maybe it can be casually described in terms of Bayesian inference, but that’s all it is: a description. This tendency toward teleology is a general phenomenon, especially in those who create mathematical models. In many domains, sources speak as if natural processes have a purpose or goal. Darwin’s work should have exorcised the influence of teleology in science, but apparently it did not.

4Although I prefer to avoid neuroscience, it is impossible when discussing predictive coding since the concept is so closely tied to physiology. Some suggest that the brain sends reciprocal signals to the visual cortex while others suggest that the signals go to the ventral system, which mediates object identification and localization. I have spared the reader the messy, and sometimes inconsistent, details of such research.

5When memories are recalled, they are sometimes automatically adjusted to fit the dominant schema. Often this means filling in missing information with assumed values. In many ways, memory is much like an internal perception and is similarly affected by schema-driven expectations.

6Although some have attempted to increase realism. For example, Nieuwenhuys & Oudejans (2010) used a methodology where police officers faced “suspects” who could fire soap cartridges that stung when they struck the officer.

7It works the same in science. An infinite number of positive results does not prove a theory. However, it takes only one negative result to disprove it.

8Visual angle is approximated as width/distance or more accurately as tan⁻¹(width/distance).

9Note that the very thick, low spatial frequency stripes also fade quickly with decreased contrast. This is only true in static pictures such as the figure. When there is sudden movement or flashing, the low spatial frequencies become the most visible part of the spectrum. See this article, for example. At the same time, sudden movement or flashing decreases the detectability of high spatial frequency details.

10Strictly speaking, this is only true when waves are sinusoidal as in Figure 1.

11This isn’t quite true here because the images are bandpass filtered and not low and high pass filtered, so some frequencies will be missing. If you don’t know what this means, don’t worry about it.

12Note that the original photo already has image artifacts, horizontal “jaggies.” The photo was doubtless taken off of a relatively low-resolution TV. The eye is especially sensitive to the jaggies because they are detected by “vernier” acuity. It is one of the eye’s “hyperacuities” that are much better than normal resolution or Snellen acuity. A small degree of blurring might be used to reduce them in a process called antialiasing.

Emotion

Suppose the actor is under threat and System 1 and System 2 have failed: System 2 is too slow to form a plan and System 1 has no learned schema/script to make predictions (RPD fails). I’ve already explained that this creates shock and confusion. What happens now? This is the next topic of discussion.

Humans have another System 1 kludge which is somewhat unlike those discussed so far—emotion. Affect is readily confused with emotion and is often used as a synonym. I’ve already mentioned the affect heuristic which is the feeling of pleasantness/unpleasantness attached to some object, event, or situation. The affect heuristic readily influences decision and is the reason that “buyers are liars.” In contrast, I use emotions to mean mental states such as anger, fear, anxiety, surprise, disgust, etc. As described above, failure of the current active schema can give rise to these emotions.

The role of emotion in decision-making has received little consideration until recently. However, some research has begun to appear, but it seldom reflects emotions that arise in circumstances where an actor might die in two seconds unless he does something. (Most studies typically use a weak fear-inducing stimulus such as a spider.) The reason is obvious—people cannot be placed in real life-threatening situations6. This means that there are few studies of decision-making in real life-and-death circumstances. However, there is sufficient research to make general predictions of how a person will respond when emotionally aroused, especially when under threat.

While Kahneman briefly includes emotions in his discussion of System 1, this is probably a mistake. Compared to other System 1 kludges that require learning and experience, emotions provide an even more primitive and faster solution to the need for circumventing the limitations of human information processing. This is why I suggest splitting off emotions as System 0, because it is even older and more deeply embedded than most System 1 mechanisms. Moreover, emotions do not seem to fit neatly into the bottom-up vs. top-down dichotomy. They are top-down because they originate in the brain as opposed to the senses, but "top-down" intuitively means originating in a high mental level. Obvious, this is not where emotions arise.

In a given situation, an actor may find himself without an applicable schema or the time to perform analysis, but he will be able to fall back to emotions as a guide to decision-making and behavior. Emotions get a lot of bad press as leading to irrational or otherwise unproductive behavior. However, emotional processing should be recognized for what it is: a solution to be employed when all the other, theoretically better behavioral guides have failed. Like other System 1 kludges, moreover, the criticism occurs in part because its failures draw more attention. Last, it is a last line of defense in life-threatening circumstances, as described below.

While emotions themselves are innate, System 0 can have both learned and innate triggers. An example of a learned trigger is the story of Albert and the white rat where through classical conditioning a person learns to associate an emotion with a previously neutral stimulus. The sound of a dentist drill is another common example.

A good example of an innate trigger is “looming.” When an object approaches the eye, its retinal image grows until it eventually fills the entire visual field—it has hit the viewer in the face. You can see this by simply holding your hand at arm’s length and moving it toward the face. The hand grows bigger and bigger until it fills the entire visual field when it’s at the eye and has hit you. In short, looming tells you whether an object is going to hit you. A rapidly looming object is going to hit you very soon. In other words, you are facing a physical threat.

Rapid looming of a nearby object elicits a defense reaction that short-circuits cognition. It is likely an innate predisposition since infants as young as three weeks old have been shown to make defensive responses to looming. Presumably, they are unable to perform cognitive processing so some deeply-wired mechanism must be operating directly on the sensory input. Physiological studies have identified looming detectors in the brain and defensive responses to looming have also been demonstrated in virtually all species tested right down to insects. It is a very primitive survival mechanism.

Three main factors determine when a looming object will hit you. One is speed. Faster approach speed creates faster looming. Another is size. The larger the object, the faster it appears to loom. However, the biggest factor is proximity. Objects loom much faster when they are near. A person (large size) rushing out of a nearby doorway (close proximity) will create fast looming and induce a strong emotional response. An additional factor is the degree of perceived threat. Viewers judge that threatening objects will hit them sooner than nonthreatening ones.
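The geometry behind these three factors can be sketched numerically. The following is only an illustration, not a model taken from the looming literature: it assumes a flat object viewed head-on that approaches at constant speed, and it uses the standard formula for the visual angle of a centered object, 2·arctan(width / (2·distance)). The function names and the sample sizes, distances, and speeds are hypothetical.

```python
import math

def visual_angle_deg(width_m, distance_m):
    """Visual angle (degrees) of an object of the given width, centered on the sightline."""
    return math.degrees(2 * math.atan(width_m / (2 * distance_m)))

def looming_rate_deg_per_s(width_m, distance_m, speed_m_s):
    """Expansion rate of the visual angle for an object approaching at constant speed.

    This is the time derivative of 2*atan(w / (2*d)) when d shrinks at `speed_m_s`.
    """
    rate_rad = width_m * speed_m_s / (distance_m ** 2 + width_m ** 2 / 4)
    return math.degrees(rate_rad)

# Hypothetical baseline: a 0.5 m wide object, 4 m away, approaching at 2 m/s.
baseline = looming_rate_deg_per_s(0.5, 4.0, 2.0)
faster = looming_rate_deg_per_s(0.5, 4.0, 4.0)   # double the speed
larger = looming_rate_deg_per_s(1.0, 4.0, 2.0)   # double the size
nearer = looming_rate_deg_per_s(0.5, 2.0, 2.0)   # half the distance

print(f"baseline looming rate: {baseline:.2f} deg/s")
print(f"double speed -> x{faster / baseline:.2f}")
print(f"double size  -> x{larger / baseline:.2f}")
print(f"half distance -> x{nearer / baseline:.2f}")
```

Under these assumptions, doubling speed or size roughly doubles the looming rate, while halving the distance roughly quadruples it, which is consistent with proximity being the biggest factor.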

The threat created by nearby looming objects is also amplified when they fall within “defensive peripersonal space” (DPPS) (e.g., Bufacchi, Liang, Griffin, & Iannetti, 2016). DPPS is a protective area surrounding the body. Potentially harmful objects located within this space elicit stronger defensive reactions. Some conceptualizations of peripersonal space put it at 2-3 meters. However, DPPS is probably not a fixed size but becomes greater in response to a looming threat.

For present purposes, the most relevant research has studied emotions that arise from threat. When threat evokes emotions of surprise, anxiety, and fear, information processing, decision-making, and behavior are characterized by the following:

Automatic reaction (System 1, not volitional). This automatic response may also be adaptive because it is fast and less impaired by stress. Recall, for example, that an act need only trigger a motor schema for it to run off in stereotyped fashion. However, very extreme stress can disrupt even highly overlearned motor schemata. Response may also be automatically influenced by stimulus-response compatibility and conflict.

Fast reaction. Perhaps the most powerful effect is increased speed. In a crisis, speed of response is the main concern. This means that an automatic defense reaction is likely to be triggered before any System 2 planning occurs. It may even occur prior to System 1 or activate because System 1 failed (no available schema). Rousseau succinctly summarized this in saying “I felt before I thought.”

Perceptual narrowing. By now, most people have heard of this phenomenon. It is often described as narrowing the attentional spotlight’s focus very tightly around the sightline so that the peripheral field is ignored, i.e., tunnel vision. However, perceptual narrowing is a much more general mental phenomenon. The viewer also narrows in the sense that he perceives fewer interpretations of the scene and considers only a few simple alternative responses. The viewer sacrifices depth of analysis for speed. This is the general theme of System 0 behavior: trade off accuracy and complexity for speed.

Negative information is more salient and more heavily weighted. Decision makers under threat seek and most readily process negative information and then place more emphasis on that information. Shermer (2011) suggests that the bias toward negative information is a survival mechanism. In Signal Detection Theory terms, a person acting under uncertainty can make two types of error when judging whether an object/environment is a threat. One is a “false alarm,” where the judgment is that there is a threat, but in fact none exists. The other error type is a “miss,” where the judgment is no threat when in actuality one exists. The decision maker could suffer any number of false alarms, and the consequences would be minor. However, even one miss could be fatal. Negative information is then more useful because the consequences of a miss are potentially so great7. This emphasis on the negative is an exaggerated version of the “negativity bias” which commonly affects most people in even mildly threatening conditions.
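The asymmetry between the two error types can be made concrete with a small expected-cost calculation. This is an illustrative sketch only: the cost values and the function names are assumptions chosen for the example, not figures from Shermer (2011) or from the Signal Detection Theory literature.

```python
def expected_costs(p_threat, cost_false_alarm, cost_miss):
    """Return (expected cost of responding as if threatened, expected cost of ignoring)."""
    cost_if_respond = (1 - p_threat) * cost_false_alarm  # wrong only when no threat exists
    cost_if_ignore = p_threat * cost_miss                # wrong only when a threat exists
    return cost_if_respond, cost_if_ignore

def threat_probability_threshold(cost_false_alarm, cost_miss):
    """Probability of threat above which responding has the lower expected cost."""
    return cost_false_alarm / (cost_false_alarm + cost_miss)

# Hypothetical costs: a false alarm is a minor nuisance, a miss is catastrophic.
COST_FALSE_ALARM = 1.0
COST_MISS = 1000.0

threshold = threat_probability_threshold(COST_FALSE_ALARM, COST_MISS)
respond, ignore = expected_costs(0.01, COST_FALSE_ALARM, COST_MISS)

print(f"Respond whenever P(threat) exceeds {threshold:.4f}")
print(f"At P(threat) = 0.01: responding costs {respond:.2f}, ignoring costs {ignore:.2f}")
```

With these assumed costs, even a one percent chance of threat makes the defensive response the cheaper choice, which is why a decision maker can tolerate many false alarms in order to avoid a single miss.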

More reliance on bottom-up information. When no pre-existing schema automatically surfaces, actors prioritize sensory information. However, the sensory information must be simple and processed with high speed.

Chaining is strongly promoted. Imagine that you are late for an important meeting and are feeling stressed. You show up at the building, approach the elevator bank, and see the up-arrow call button. What happens next? You are likely to push the button, but not once. The likely response is a rapid series of tap, tap, tap etc. In response to emotional stress, humans try to make their responses stronger in some way. If the response has a continuum, like muscle exertion, the actor will ramp up the strength used. When the response is discrete and cannot be amplified incrementally, however, the actor makes the response stronger by rapidly repeating it in a chain. This is why you repeatedly press the elevator button (and probably used some force to magnify the response) when late for the meeting. The important point is: the many button presses constituted a single response. Of course, if you stopped and thought, then you would know that pressing the button more than once has no added effect on calling the elevator. But you didn't stop and think—you just acted. System 0 had overridden System 2.

Response is keyed to low spatial frequency information. An example of the sensory information with high priority is the “low spatial frequencies” in a scene. Their prominence in decision-making is another reflection of the need for speed when under threat.

To explain what this “spatial frequency” means, I first lay some groundwork about basic visual processing. Images can be described in several ways. One is by the lines and edges that they contain. Another is by their “spatial frequency” content (e.g., Green, Corwin, & Zemon, 1976). Figure 1 is a classic image from the visual science literature showing the concept of spatial frequency. It is the number of stripes in a given area of visual angle (roughly distance across the page8). Across the horizontal axis, the stripes become thinner, i.e., the frequency goes from low to high. In the vertical direction, the contrast decreases from bottom to top. Note that the high spatial frequencies lose their apparent contrast more from bottom to top. This is because they are more difficult to see in low visibility conditions.9

Figure 1. Spatial frequencies increasing right while contrast decreases upward.

Mathematically, any image can be described as a collection of these patterns with different frequencies, orientations and contrasts10. That is, the image shown below in Figure 2 can be described by its spatial frequency content, its “spectrum.”

Figure 2 shows an original image (left) that has been filtered to reveal only the low spatial frequencies (middle) and then only the higher spatial frequencies (right). That is, each of the two filtered images shows only a narrow band of the original’s constituent spatial frequencies. It is obvious that the two images are complementary in some way. Roughly speaking, superimposing the two filtered images would approximately reproduce the original11. The lower frequency image is obviously blurry. Increasing spatial frequency reveals the finer detail, the edges that define shape.

Figure 2. A photographic image filtered to show the low and high spatial frequencies12.

Anyone who uses the edge sharpening function of an image processing program is attempting to magnify the high spatial frequencies to sharpen the edge detail and make thin lines more distinct. Conversely, the blurring feature removes high spatial frequency information. For present purposes, the TLDR is that low spatial frequencies reveal only a blurry impression of overall shape. The higher frequencies are needed to supply the detailed information that creates a crisp, sharp image and shape definition.
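The relation between blurring, sharpening, and spatial frequency can be sketched in a few lines. This is a toy illustration under stated assumptions, not the filtering used to make Figure 2: it works on a single hypothetical row of luminance values rather than a full image, and it uses a simple box blur as a crude stand-in for a low-pass filter. The function and variable names are made up for the example.

```python
def box_blur(signal, radius):
    """Crude low-pass filter: replace each sample with the mean of its neighborhood."""
    blurred = []
    for i in range(len(signal)):
        lo = max(0, i - radius)
        hi = min(len(signal), i + radius + 1)
        window = signal[lo:hi]
        blurred.append(sum(window) / len(window))
    return blurred

# Hypothetical luminance profile across one image row: dark, a bright bar, dark.
row = [0.0] * 8 + [1.0] * 4 + [0.0] * 8

low = box_blur(row, radius=3)                    # blurry impression of overall shape
high = [r - l for r, l in zip(row, low)]         # residual: edges and fine detail
rebuilt = [l + h for l, h in zip(low, high)]     # the two parts sum back to the row
sharpened = [r + h for r, h in zip(row, high)]   # boosting detail ("unsharp masking")

print("low :", [round(v, 2) for v in low])
print("high:", [round(v, 2) for v in high])
```

The low-pass output keeps the location and rough extent of the bar but smears its edges, while the residual is near zero everywhere except around the edges. Adding the residual back to the original exaggerates the edges, which is essentially what a sharpening tool does.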

Under stress, actors rely more on the low spatial frequencies because this is the information that travels most rapidly through the visual system and produces the fastest reaction time (e.g., Vassilev & Mitov, 1976). They are also better at attracting attention. As a result, the actor’s first impression and response is determined by a blurred perception of overall shape. In good visibility, the higher, more detailed frequency bands soon catch up with the low bands. Under time pressure and stress, however, the actors base response on the blurry low spatial frequencies before there is enough detail to distinguish between objects of similar overall shape. In poor visibility, moreover, high spatial frequencies may never appear due to their lower apparent contrast and detectability. Unsurprisingly, under threat viewers make better form discriminations with lower spatial than with higher spatial frequencies (e.g., Lojowska, Mulckhuyse, Hermans, & Roelofs, 2019).

Contrast polarity is another factor that determines perception under threat. Figure 3 shows that contrast comes in two polarities, positive (light on dark) and negative (dark on light). In most normal scenes at night, the polarity depends on location of the light source. Positive polarity occurs when the light source is in front of the subject and negative occurs when the light source is behind the subject.

Figure 3. Positive contrast left and negative right. The bottom pair is blurred, rendering only low spatial frequency information.

The effects of polarity are obvious in the top pair of Figure 3. Negative contrast obscures details such as the man’s hands. This is why it is sometimes termed “silhouette” contrast. The bottom pair shows the same images filtered to remove high spatial frequencies. The blurring further degrades image quality: the hands are less distinct even in positive contrast, while the negative contrast image loses even more detail.

Summary

This concludes the discussion of the kludges that humans use to circumvent our limitations when acting in a bounded rationality with limited information, limited resources, and often, limited time. If there is a single theme that ties the kludges together, it is the tradeoff between ease and speed on one hand and accuracy and complexity on the other. Table 1 is a very rough guide to the tradeoff:

Table 1. Tradeoffs among Systems 0, 1, and 2.

| System | Speed | Accuracy | Situations |
|---|---|---|---|
| 2 | slow | very high | complex or novel when there is time for analysis |
| 1 | fast | high | routine that have been frequently encountered |
| 0 | very fast? | uncertain | fundamental surprise, especially under threat when speed matters |

System 2 is the most accurate and can deal with the most complex problems, but it is also the most resource intensive and the slowest. When possible, tasks will usually be offloaded to System 1, which is good for routine situations. It is faster, uses little mental resource, and still provides very high accuracy, although it can err in several ways. The affordances that objects offer can be beneficial but not always. Mental short-cuts usually work but not always. Schemata almost always work, but they are holistic and there are several ways that they can go wrong, especially when circumstances change unexpectedly. Last, System 0 is always there as a final fallback when all else fails, especially under the shock of fundamental surprise and/or threat. It uses little mental resource and operates on the most salient sensory information, but it is of course notoriously error-prone. It can be very fast, but only if there is a highly salient response. If the person is caught in something like an avoidance-avoidance conflict (see section 3), then the response can be slow. System 0 is better than nothing, especially as it is there as a protection against dire consequences. It works on the basis that it is better to be safe than sorry.

So far, I have presented a mostly theoretical discussion of the kludges. Except for a few examples, they have been disembodied from the flow of real behavior. The next section examines them operating in a real-world situation.

Next: Applying the Kludges in Case Analysis

Endnotes

1In German, it means a bit more. It suggests a mental movie that the person cannot stop from obsessively running.

2For present purposes, the terms “prediction” and “expectation” are synonyms.

3Statements such as “the brain is performing Bayesian inference” should be taken in the correct sense. It is easy to lapse into teleology, but the brain isn’t trying to do anything. It is a mechanism that has no end goal. It just does what it does. Maybe it can be casually described in terms of Bayesian inference, but that’s all it is: a description. This tendency toward teleology is a general phenomenon, especially in those who create mathematical models. In many domains, sources speak as if natural processes have a purpose or goal. Darwin’s work should have exorcised the influence of teleology in science, but apparently it did not.

4Although I prefer to avoid neuroscience, it is impossible when discussing predictive coding since the concept is so closely tied to physiology. Some suggest that the brain sends reciprocal signals to the visual cortex while others suggest that the signals go to the ventral system, which mediates object identification and localization. I have spared the reader the messy, and sometimes inconsistent, details of such research.

5When memories are recalled, they are sometimes automatically adjusted to fit the dominant schema. Often this means filling in missing information with assumed values. In many ways, memory is much like an internal perception and is similarly affected by schema-driven expectations.

6Although some have attempted to increase realism. For example, Nieuwenhuys & Oudejans (2010) used a methodology where police officers faced “suspects” who could fire soap cartridges that stung when they struck the officer.

7It works the same in science. An infinite number of positive results does not prove a theory. However, it takes only one negative result to disprove it.

8Visual angle is approximated as width/distance or more accurately as arctan(width/distance).

9Note that the very thick, low spatial frequency stripes also fade quickly with decreased contrast. This is only true in static pictures such as the figure. When there is sudden movement or flashing, the low spatial frequencies become the most visible part of the spectrum. See this article, for example. At the same time, sudden movement or flashing decreases the detectability of high spatial frequency details.

10Strictly speaking, this is only true when waves are sinusoidal as in Figure 1.

11This isn’t quite true here because the images are bandpass filtered and not low and high pass filtered, so some frequencies will be missing. If you don’t know what this means, don’t worry about it.

12Note that the original photo already has image artifacts, horizontal “jaggies.” The photo was doubtless taken off a relatively low-resolution TV. The eye is especially sensitive to the jaggies because they are detected by “vernier” acuity. It is one of the eye’s “hyperacuities” that are much better than normal resolution or Snellen acuity. A small degree of blurring might be used to reduce them in a process called antialiasing.
